How Data Center Companies Could Navigate the AI Boom

Artificial Intelligence (AI) is the future and is causing a boom in the data center industry. In this article, I explore how data center providers might monetize this trend.

The vision of Artificial Intelligence is to provide a human-like interface to traditional applications. Traditional applications use a well-defined set of parameters, encoded in algorithms, to produce results. For example, Amazon.com uses fixed algorithms to let users search for and check out products. The challenge with such applications is that they overwhelm users. Searching for products on Amazon becomes mentally exhausting because, for each result, we have to check that it is the right product, that it will be delivered on time, that the reviews are good, and so on. For such cases, Generative AI can provide a human-like interface that weighs far more parameters than a user could. Users only have to ask for what they want, and AI will find the best output for them, just like a personal assistant.

The bottleneck in AI development is the availability of compute infrastructure, which is driving a boom in the data center business. As a result, colocation providers must consider the following factors:

- The compute requirement has significantly increased, but the exact capacity needed is not well-defined.

- Data centers have to be customized for new technologies such as special-purpose chips, irregular training of Machine Learning/Foundational models, and hosting of heavyweight AI applications.

- Developers assume that compute will be readily available, and want to spend minimal effort on setting up infrastructure.

The amount of data center capacity required has grown sharply, but exact numbers are unknown. It is estimated that as much capacity as was built in the United States over the past ten years must be delivered in the next two years. This is putting significant strain on energy, cooling, capital, and real estate. However, the exact capacity needed is unknown, since the financial aspects of Artificial Intelligence are an open question. AI is an emerging technology, and how quickly customers will adopt it is uncertain. If customer adoption is low and revenues cannot be generated, over-investment may lead to significant losses. At the same time, if AI turns out to be disruptive and a revenue generator, data center providers that cannot meet demand will lose customers’ trust.

Data centers must be customized for new technologies. The core objective of a data center is to provide the real estate necessary for compute, and Artificial Intelligence requires a lot of it. The compute requirements depend on two factors: efficiency and scalability. Efficiency refers to the amount of compute that each chip in the data center can perform. To increase efficiency, special-purpose chips have emerged from providers such as NVIDIA, and these require specialized cooling and power. Scalability refers to the ability to add or remove compute instances. Customers may require a one-time training of foundational models that creates high compute demand, but after training, their compute requirements may go down. Data center providers must study how customers’ compute requirements differ between training a model and hosting it. If the difference is significant, long-term demand for compute may go down once foundational models mature.

Developers hate setting up infrastructure. Developers want to code. They do not want to set up hardware. Most software engineers depend heavily on off-the-shelf compute infrastructure. If this infrastructure is not available, whether for general-purpose applications or for Artificial Intelligence, it leads to a significant loss of trust. Therefore, data center providers need to ensure the right infrastructure is in place for developers to build applications. They must also ensure high-quality operations and give their customers transparency into the facility’s operations.

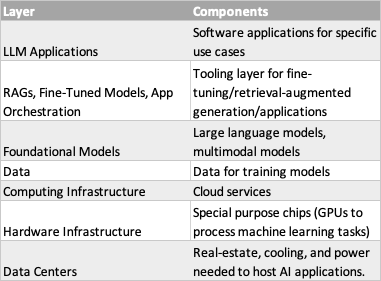

Layers of the Artificial Intelligence industry. Data Centers must be customized to enable all of the above layers. Source: MIT Sloan Management Review

How can data center providers satisfy customer requirements? To do so, they must a/ set up their architecture to incorporate specialized AI technologies; b/ be able to dynamically scale to customers’ compute requirements; c/ collect data on how customers use compute infrastructure, and gain visibility into customers’ Artificial Intelligence P&L and compute trends; d/ provide transparency to customers on operations, and provide mechanisms that let them scale their compute instances; e/ focus on fundamental financials and take calculated risks instead of overinvesting.

Data center providers should meet the requirements of new AI technologies. They need to proactively study and incorporate changes that meet the requirements of all layers of AI, as shown in the above diagram.

Providers should develop methods to dynamically scale compute workloads. There is a chance that AI will create a one-time compute load that goes down once the technology matures. It could be reasonable to bet on large-capacity projects, but these projects must be structured so that they can be scaled up and down without harming the financials. For example, if providers invest in a 1 GW facility, they should not build financial forecasts that assume the entire capacity will be loaded. Instead, they should be able to serve the full 1 GW, while the load needed to stay profitable should be much lower, for example around 100 MW.
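To make the 1 GW example concrete, here is a minimal sketch of a break-even calculation. Every figure in it (fixed cost, price, and variable cost per MW) is a hypothetical number chosen only so that the break-even load lands at 100 MW; none of them come from real facilities. The `Facility` class and its field names are likewise assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Facility:
    """Toy financial model of a data center; all inputs are hypothetical."""
    capacity_mw: float                # total capacity the site can serve
    fixed_cost_per_year: float        # costs paid regardless of load (e.g. financing, staff)
    price_per_mw_year: float          # revenue per leased MW per year
    variable_cost_per_mw_year: float  # costs that scale with load (e.g. energy, cooling)

    def break_even_mw(self) -> float:
        """Leased load (in MW) at which yearly profit is exactly zero."""
        margin = self.price_per_mw_year - self.variable_cost_per_mw_year
        if margin <= 0:
            raise ValueError("price must exceed variable cost per MW")
        return self.fixed_cost_per_year / margin

    def break_even_utilization(self) -> float:
        """Break-even load as a fraction of total capacity."""
        return self.break_even_mw() / self.capacity_mw

    def yearly_profit(self, leased_mw: float) -> float:
        """Profit at a given leased load, capped at the site's capacity."""
        leased = min(leased_mw, self.capacity_mw)
        margin = self.price_per_mw_year - self.variable_cost_per_mw_year
        return leased * margin - self.fixed_cost_per_year


# Hypothetical 1 GW (1000 MW) facility with made-up cost and price figures.
facility = Facility(
    capacity_mw=1000,
    fixed_cost_per_year=100_000_000,
    price_per_mw_year=2_500_000,
    variable_cost_per_mw_year=1_500_000,
)

print(facility.break_even_mw())           # load needed to stay profitable
print(facility.break_even_utilization())  # same, as a share of capacity
print(facility.yearly_profit(50))         # loss if only 50 MW is leased
```

With these assumed inputs, the facility breaks even at 10% of its capacity. A provider could run the same calculation with its own numbers to see how far demand can fall before the project loses money.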

Providers should begin collecting data on AI trends. Data is king, especially during uncertain times. Data center providers must begin collecting data on how customers use servers, the demand for chips, and their customers’ ability to survive in the AI industry. Data centers sit at the root of the AI supply chain and are well placed to gain visibility into the industry’s trends.

Data center companies should provide transparency to customers on their workloads. Compute is constrained, and cloud computing companies are trying to optimize their existing workloads. They have been experimenting with networks, specialized hardware, cooling, and more. Providers must make it easy for customers to access and modify their existing compute workloads.

Companies should focus on financials and avoid overinvesting. Data center providers should not take huge risks on emerging technologies, especially when customer adoption is unknown. To minimize risk, providers should try to secure customer commitments before investing in capacity, focus on low-cost energy through renewables, and secure long-term financing to sustain themselves.